ESnet’s goal is to create the best possible network environment for data-intensive, distributed scientific research projects across the DOE ecosystem. Achieving that goal requires strong co-design partnerships with various stakeholders. This is a primary focus of our Science Engagement team, but multiple departments within ESnet also actively participate in these collaborative efforts, which range from engaging with individual principal investigators to conducting the formal Requirements Review program. For this program, ESnet staff meet with scientists and program managers from each of the six DOE Office of Science programs every few years. The outcomes from each review are published in a comprehensive report with multiple case studies.

The following projects illustrate ESnet’s efforts to create an environment in which scientific discovery is unconstrained by geography and scientists can remain unburdened by the sophisticated network infrastructure underlying their work.

Speeding Understanding of How Nuclei Decay

The Facility for Rare Isotope Beams (FRIB) at Michigan State University (MSU) enables scientists to study the decay of exotic, short-lived isotopes. These experiments generate massive datasets (reaching terabytes) as scientists attempt to reconstruct the decay pathways and gain insights into nuclear structure. The challenge lies in rapidly analyzing this data to keep pace with experimental activities.

In spring 2023, FRIB was connected to ESnet6 via two 100 gigabit-per-second (Gbps) circuits. In fall 2023, scientific software and IT groups at FRIB, MSU IT, Berkeley Lab, and ESnet staged a demonstration involving the FRIB Decay Station Initiator (FDSi). FDSi is a device supported through a collaboration among multiple groups, including FRIB, the University of Tennessee, Knoxville, Argonne National Laboratory, and Oak Ridge National Laboratory.

The demonstration involved collecting data at FDSi, using high-speed technology components to transfer that data to the National Energy Research Scientific Computing Center (NERSC) for analysis, and then returning it to FRIB. ESnet staff helped FRIB specify, install, configure, and operate a Data Transfer Node (DTN) running Globus software that could perform the data transfer immediately after the experiment concluded. The demonstration showed that it was possible to transfer 13 terabytes (TB) of data across the country at 4 TB per hour, analyze the data using NERSC resources in approximately 90 minutes, and send the data back to FRIB.
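As a rough check on those figures, the arithmetic can be sketched as follows. The decimal convention (1 TB = 10^12 bytes) and the function names are assumptions made for illustration, not details of the demonstration itself.

```python
# Back-of-the-envelope check of the transfer figures quoted above.
# Assumption: decimal units, i.e. 1 TB = 10**12 bytes.

BYTES_PER_TB = 10**12


def transfer_hours(dataset_tb: float, rate_tb_per_hour: float) -> float:
    """Hours needed to move dataset_tb at a sustained rate."""
    return dataset_tb / rate_tb_per_hour


def rate_in_gbps(rate_tb_per_hour: float) -> float:
    """Convert a rate in TB/hour to gigabits per second."""
    bits_per_hour = rate_tb_per_hour * BYTES_PER_TB * 8
    return bits_per_hour / 3600 / 10**9


if __name__ == "__main__":
    print(f"13 TB at 4 TB/hour: {transfer_hours(13, 4):.2f} hours")
    print(f"4 TB/hour sustained: {rate_in_gbps(4):.1f} Gbps")
```

Under these assumptions, the one-way transfer takes about 3.25 hours at a sustained rate of roughly 8.9 Gbps, a small fraction of a single 100 Gbps circuit.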

This was the first time scientists at FRIB used an analysis model that drew on capabilities outside MSU to analyze data faster than was possible locally. Scientists generating terabytes of data at FRIB are enthusiastic about the network’s future potential for enabling faster analysis.

Co-Designing a Fusion Superfacility

Scientists at the DIII-D National Fusion Facility need to analyze huge amounts of data from their experiments in order to make quick adjustments and speed up the development of fusion energy as a viable power source. To meet this challenge, a multi-institutional team at DIII-D collaborated with staff at NERSC and ESnet to develop a “Superfacility”: a seamless connection between experimental resources at DIII-D in San Diego and HPC facilities at NERSC in Berkeley, California, via ESnet6.

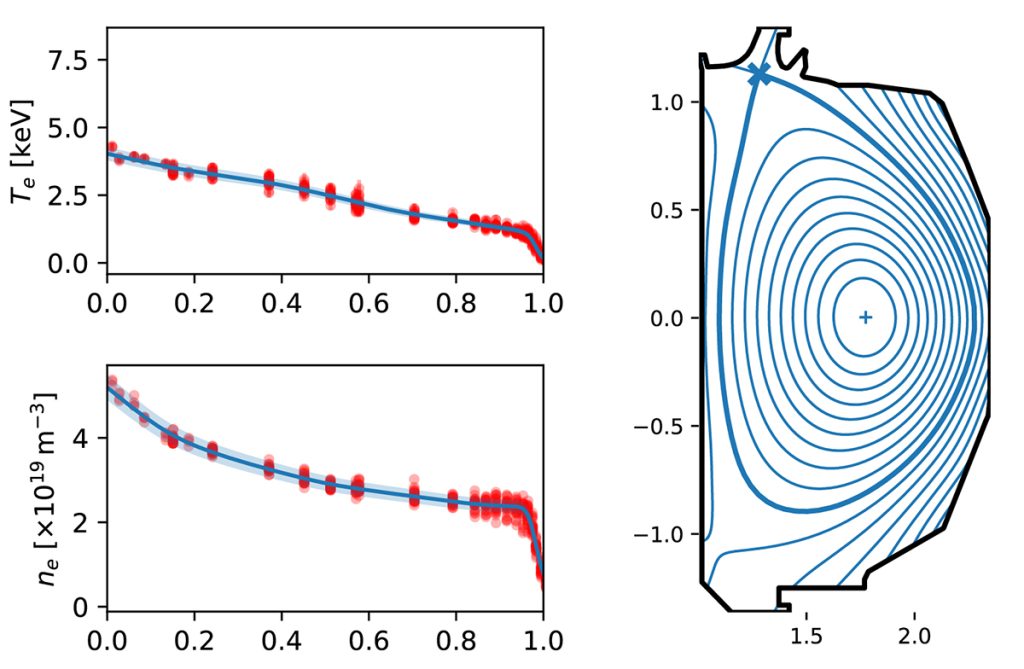

The device at the heart of DIII-D produces plasmas to study nuclear fusion. During experimental sessions, the research team studies the behavior of short plasma discharges called “shots,” typically performed at 10- to 15-minute intervals, with each producing many gigabytes of data captured by nearly 100 diagnostic and instrumentation systems. The intervals between shots allow time for adjustments of experiment parameters to address any issues or evaluate how specific parameter settings affect plasma behavior.

Previously, analyzing data between experimental “shots” was a slow, manual process. The new Superfacility uses a workflow called CAKE (Consistent Automated Kinetic Equilibria) to automate this analysis. By running CAKE at NERSC via ESnet, researchers cut analysis time by more than 80%, from 60 minutes to just 11 minutes.
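The reduction quoted above can be checked with a few lines of arithmetic. This is only a sketch: the function names are hypothetical, and the 10- and 15-minute intervals are taken from the shot cadence described earlier.

```python
# Quick arithmetic on the analysis-time figures quoted above.
# Function names are illustrative, not part of the CAKE workflow.

def percent_reduction(before_min: float, after_min: float) -> float:
    """Percentage decrease from before_min to after_min."""
    return (before_min - after_min) / before_min * 100


def fits_between_shots(analysis_min: float, interval_min: float) -> bool:
    """True if an analysis run finishes within one shot interval."""
    return analysis_min <= interval_min


if __name__ == "__main__":
    print(f"Reduction: {percent_reduction(60, 11):.1f}%")
    for interval in (10, 15):
        ok = fits_between_shots(11, interval)
        print(f"11-minute analysis fits a {interval}-minute interval: {ok}")
```

The reduction works out to a little under 82%. An 11-minute run fits within a 15-minute interval but not a 10-minute one, whereas a 60-minute run fits within neither.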

This speedup means adjustments can be made within the experiment timeframe, dramatically accelerating the pace of research. It has also created a vast database of high-fidelity results that benefits DIII-D users and the global push for fusion energy. Effective use of remote computing via ESnet has brought significant qualitative and quantitative improvements to this workflow, and the multi-facility workflow model offers further scientific promise as it is applied to additional codes.

Labeling Network Traffic for Application Behavior Analysis

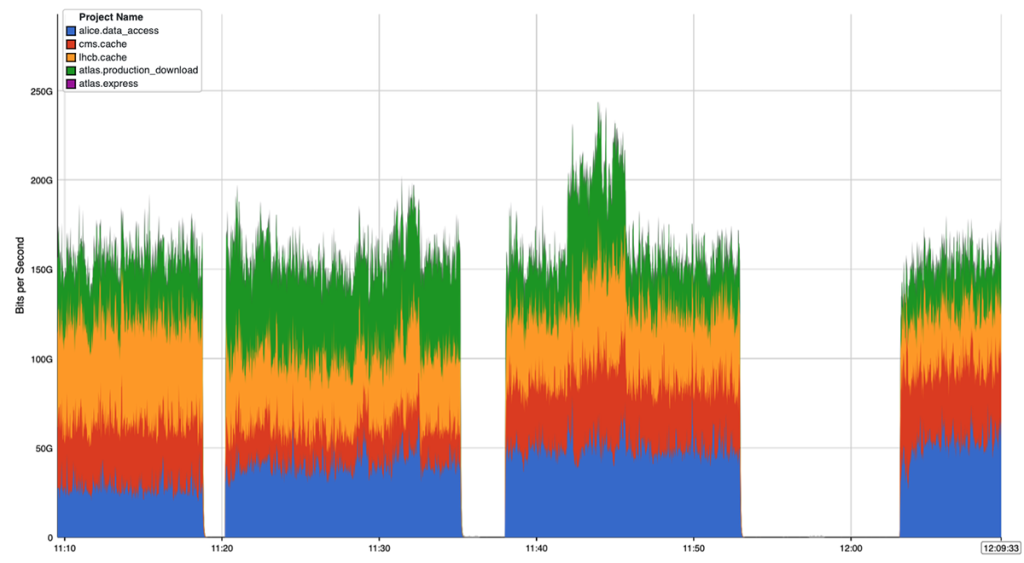

Experiments at the Large Hadron Collider (LHC) make extensive use of high-performance networking in conducting their data analysis. The Worldwide LHC Computing Grid (WLCG) is a complex global system, with more than 170 computing centers in 40-plus countries contributing to simulation, data analysis, test and measurement, and related activities. To understand how the WLCG and other science projects use high-performance networks, ESnet and the other research and education networks (RENs) routinely collect network traffic statistics. However, these statistics do not contain sufficient detail to reveal application and workflow semantics (e.g., whether a data transfer is part of a production compute job, an analysis compute job, or a simulation compute job).

To identify the higher-level semantics of network traffic in science workflows, staff from the LHC experiments and ESnet collaborated on a method of identifying applications by marking packets (for IPv6) and sending metadata to a central collector (for IPv4). These capabilities were tested in a demonstration at the SC23 conference by a multi-institutional team including staff from CERN, ESnet, SLAC, Jisc, the University of Michigan, the University of Nebraska, the University of Victoria, the Karlsruhe Institute of Technology (DE-KIT), and the Starlight exchange. The collected data showed that higher-level workflow semantics could be determined from the collected statistics.
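The two labeling paths can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration: the bit layout inside the 20-bit IPv6 flow label, the community and activity registries, and the metadata field names are hypothetical and are not taken from the method the collaboration actually deployed.

```python
import json
from typing import Tuple

FLOW_LABEL_BITS = 20  # width of the IPv6 flow label header field
COMMUNITY_BITS = 9    # hypothetical: bits reserved for the user community
ACTIVITY_BITS = 6     # hypothetical: bits reserved for the workflow type
assert COMMUNITY_BITS + ACTIVITY_BITS <= FLOW_LABEL_BITS

# Hypothetical registries mapping names to small integer identifiers.
COMMUNITIES = {"experiment_a": 1, "experiment_b": 2}
ACTIVITIES = {"production": 1, "analysis": 2, "simulation": 3}


def encode_flow_label(community: str, activity: str) -> int:
    """IPv6 path: pack community and activity IDs into a flow label value."""
    c, a = COMMUNITIES[community], ACTIVITIES[activity]
    if c >= (1 << COMMUNITY_BITS) or a >= (1 << ACTIVITY_BITS):
        raise ValueError("identifier does not fit in its bit field")
    return (c << ACTIVITY_BITS) | a


def decode_flow_label(label: int) -> Tuple[str, str]:
    """Recover (community, activity) names from a flow label value."""
    c = (label >> ACTIVITY_BITS) & ((1 << COMMUNITY_BITS) - 1)
    a = label & ((1 << ACTIVITY_BITS) - 1)
    community = {v: k for k, v in COMMUNITIES.items()}[c]
    activity = {v: k for k, v in ACTIVITIES.items()}[a]
    return community, activity


def build_flow_metadata(src: str, dst: str, community: str, activity: str) -> bytes:
    """IPv4 path: serialize flow metadata for delivery to a central collector."""
    record = {"src": src, "dst": dst, "community": community, "activity": activity}
    return json.dumps(record).encode("utf-8")


if __name__ == "__main__":
    label = encode_flow_label("experiment_b", "simulation")
    print(label, decode_flow_label(label))
    print(build_flow_metadata("192.0.2.10", "198.51.100.20", "experiment_a", "analysis"))
```

In this sketch, an intermediate network that observes only packet headers can recover the community and activity from the flow label alone, while the IPv4 path depends on the sender reporting the same information out of band.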

Analyzing traffic-flow patterns in detail is critical for understanding how these complex systems actually use the network. Labeling traffic to indicate the user community and application workflow to which it belongs helps network engineers better understand the purpose of specific data transfers. This capability is especially important for sites that support workflows from many experiments simultaneously, where any worker node or storage system may quickly switch between users. With a standardized way of marking traffic, any intermediate network or end site could quickly provide detailed visibility into the nature of the science traffic running to and from their site. This information is highly valuable for network optimization, capacity planning, service design, and other important activities.

Enabling Real-Time Streaming of Instrument Data

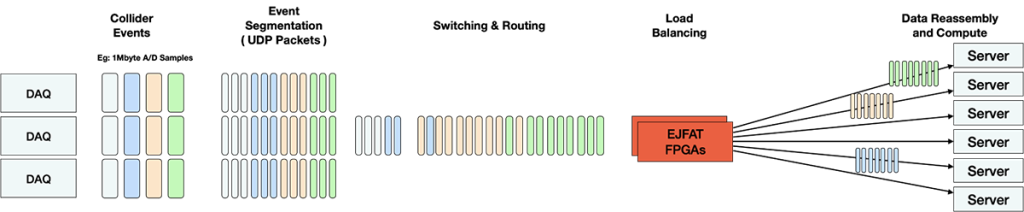

Co-designed with Thomas Jefferson National Accelerator Facility (JLab), the ESnet-JLab FPGA Accelerated Transport (EJFAT) device uses traffic-shaping and load-balancing field-programmable gate arrays (FPGAs) designed to allow data from multiple types of scientific instruments to be streamed and processed in real time (or near real time) by multiple high-performance computing (HPC) facilities.

DOE operates several large science-instrument facilities that depend on time-sensitive workflows, including particle accelerators, X-ray light sources, and electron microscopes, all of which are fitted with numerous high-speed A/D data acquisition systems (DAQs) that can produce multiple 100- to 400-Gbps data streams for recording and processing. To date, this processing has been conducted on enormous banks of compute nodes in local and remote data facilities.

EJFAT (pronounced “Edge-Fat”) is a tool for simplifying, automating, and accelerating how those raw data streams are quickly turned into actionable results. It could potentially work for all such instruments with time-sensitive workflows. ESnet and NERSC were awarded a Laboratory Directed Research and Development (LDRD) grant from the DOE to find a way to show the resilience of this approach.

The keystone of EJFAT is the jointly developed dynamic compute-work Load Balancer (LB) for UDP-streamed data. The LB is a suite with two parts: FPGAs that execute the dynamically configurable, low, fixed-latency LB data plane, which redirects packets in real time at high throughput; and a control plane, running on the FPGA host computer, that monitors network and compute-farm telemetry in order to make dynamic decisions about which destination compute hosts receive the redirected data.
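The division of labor between the two planes can be sketched in software. This is a simplified illustration under stated assumptions, not EJFAT's implementation: the `queue_fill` telemetry metric, the slot-table scheme, and all names below are hypothetical. The control plane periodically rebuilds a lookup table weighted by each node's free capacity, and the data plane performs a constant-cost lookup per event.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeTelemetry:
    host: str
    queue_fill: float  # hypothetical metric: 0.0 = idle, 1.0 = saturated


def build_slot_table(nodes: List[NodeTelemetry], num_slots: int = 16) -> List[str]:
    """Control plane: give each node slots in proportion to its free capacity."""
    weights = [max(0.0, 1.0 - n.queue_fill) for n in nodes]
    total = sum(weights)
    if total == 0:
        raise ValueError("no compute node has free capacity")
    exact = [w * num_slots / total for w in weights]
    counts = [int(x) for x in exact]
    # Hand leftover slots to the nodes with the largest fractional share.
    leftover = num_slots - sum(counts)
    by_fraction = sorted(range(len(nodes)), key=lambda i: exact[i] - counts[i], reverse=True)
    for i in by_fraction[:leftover]:
        counts[i] += 1
    table: List[str] = []
    for node, count in zip(nodes, counts):
        table.extend([node.host] * count)
    return table


def route(event_number: int, table: List[str]) -> str:
    """Data plane: constant-cost lookup from event number to destination host."""
    return table[event_number % len(table)]


if __name__ == "__main__":
    telemetry = [
        NodeTelemetry("node-a", 0.0),
        NodeTelemetry("node-b", 0.5),
        NodeTelemetry("node-c", 1.0),
    ]
    table = build_slot_table(telemetry, num_slots=12)
    for event in (0, 8, 12):
        print(event, route(event, table))
```

Keeping the per-packet step to a single table lookup is what lets latency stay fixed in a design like this; all of the telemetry-driven decision-making happens off the fast path, when the table is rebuilt.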

In 2023, the EJFAT collaborators focused on developing the EJFAT control plane and deploying it on the EJFAT testbed to allow for multi-facility testing. To establish the testbed, a Layer 3 Virtual Private Network (L3VPN) was instantiated on the ESnet6 infrastructure, with the intention of connecting more DOE labs as DAQ sources and Leadership Computing Facilities (LCFs) as compute resources.

Supporting Field Science Research Using Private Cellular Towers

Field science researchers working on Earth systems, environmental, and energy-related applications are deploying an increasing number of sensors that produce ever-larger amounts of data. They are also planning workflows that will tie these sensor systems into DOE’s HPC and other data-analytics resources and user facilities. To ensure that ESnet’s capabilities and services can support these emerging, rapidly growing needs, ESnet has deployed a novel prototype of an affordable, flexible, private cellular station that brings connectivity to sensor sites in remote, resource-constrained conditions. In 2023, a team from ESnet, Berkeley Lab’s Earth and Environmental Sciences Area, and Argonne National Laboratory deployed the first such tower outside Crested Butte, Colorado, at an altitude of approximately 3,500 meters. The tower, equipped with cellular access points, edge computing capabilities, and satellite connectivity, connects to sensor systems deployed as part of the DOE’s Surface Atmosphere Integrated Field Laboratory (SAIL) program.

SAIL seeks to measure the main atmospheric drivers of water resources, including precipitation, clouds, winds, aerosols, radiation, temperature, and humidity. The tower significantly enhances data backhaul capabilities, moving beyond the limitations of sparse commercial cellular coverage and manual data collection.

In keeping with ESnet’s vision of unshackling scientific progress from geographic limitations and enabling researchers to connect to critical resources and collaborate regardless of location, we are exploring a portfolio of private 5G, LoRa, and non-terrestrial network systems to test and extend the reach and ease of field science.