ESnet’s goal is to create the best possible network environment for data-intensive, distributed research projects across the DOE ecosystem. Achieving that goal requires strong “co-design” partnerships with a wide range of stakeholders. This is a primary focus of our Science Engagement team, but multiple departments within ESnet also actively participate in these collaborative efforts, which range from engaging with individual principal investigators to conducting the formal Requirements Review program. The following projects provide a sampling of ESnet’s efforts to create an environment in which scientific discovery is completely unconstrained by geography, and in which scientists can remain unburdened by, and even unaware of, the sophisticated network infrastructure underlying their work.

The Engagement and Performance Operations Center (EPOC): A Toolbox for R&E Networks

ESnet, as co-PI, has been collaborating with the Texas Advanced Computing Center (TACC) to establish the Engagement and Performance Operations Center (EPOC) under an NSF grant totaling $3.5 million. (Originally intended for three years, the grant’s duration was extended to five years due to pandemic-related delays.) EPOC is designed to provide researchers, and the IT engineers supporting them, with a holistic set of tools and services for debugging performance issues and enabling reliable, robust data transfers. The project scope covers five main activities:

- Providing “roadside assistance” through a coordinated Operations Center to actively resolve network performance issues with end-to-end data transfers.

- Conducting application deep dives to establish closer collaboration with application communities, understand end-to-end research workflows, and assess bottlenecks and potential capacity problems.

- Enabling network analysis using the NetSage monitoring suite to proactively discover and resolve performance issues. NetSage is currently deployed at eight locations within networks, campuses, and facilities.

- Coordinating training activities to ensure effective use of network tools and science support.

- Emphasizing data mobility through the Data Mobility Exhibition effort.

The information EPOC gathers from its users provides deeper insight into the needs of scientific research collaborations, which in turn strengthens ESnet’s ability to enable better data transfers. In 2022, the ESnet/TACC EPOC team gave 50 talks at virtual and in-person events; addressed 88 roadside assistance tickets, bringing the project’s total since its start to 277; and completed and published the results of six deep dive events.

Designing the Superfacility Networking Approach

The Superfacility Project at Berkeley Lab, which began in 2019 and concluded in 2022, has been a strategic priority for ESnet. The superfacility model is designed to leverage high-performance computing (HPC) and high-speed networks for experimental science. More than simply a model of connected experiment, network, and HPC facilities, the superfacility program envisioned an integrated ecosystem of compute, networking, and experimental infrastructures, supported by common, shared software, tools, and expertise needed to make those connected facilities easy to use.

A key component of the Superfacility Project was in-depth engagement with science teams representing challenging use cases across the DOE Office of Science. The goal was to develop and implement automated pipelines that analyze data from remote facilities at large scale without routine human intervention. In several cases, the project team went beyond demonstrations and now provides production-level services for experiment teams, including:

- Dark Energy Spectroscopic Instrument (DESI): Automated nightly data movement from telescope to NERSC and deadline-driven data analysis.

- Linac Coherent Light Source (LCLS): Automated data movement and analysis from several experiments running at high-data-rate end stations.

- National Center for Electron Microscopy (NCEM): Automated workflow pulling data from the 4D STEM camera to the Cori supercomputer at NERSC for near-real-time data processing.

- LUX-ZEPLIN experiment (LZ): Automated 24/7 data analysis from the dark matter detector.

In the next few years, ESnet will be applying what it has learned from the Superfacility Project implementations to operationalize the DOE’s Integrated Research Infrastructure initiative.

Bolstering Data Acquisition and Transfer for GRETA

GRETA (Gamma Ray Energy Tracking Array) is a new instrument designed to reveal novel details about the structure and inner workings of atomic nuclei, elevating our understanding of matter and the stellar creation of elements. GRETA will be installed at the DOE’s Facility for Rare Isotope Beams (FRIB) located at Michigan State University in East Lansing; it will go online in 2024.

GRETA will house an array of 120 detectors that will produce up to 480,000 messages per second (4 gigabytes of data per second in total); this data will flow through a computing cluster for real-time analysis. Although most of the data will travel only about 50 meters over the local network for on-the-fly analysis, the system has been designed to easily send data to more distant HPC systems as necessary.
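The rates quoted above imply a few derived figures that are useful when sizing the analysis cluster and its network. The sketch below computes them under two simplifying assumptions that are not from the source: decimal units (1 GB = 10^9 bytes) and an even split of messages across the 120 detectors. Real traffic is likely to be burstier and uneven.

```python
# Back-of-the-envelope figures derived from the GRETA rates quoted above.
# Assumptions (not from the source): decimal units (1 GB = 10**9 bytes) and
# a uniform split of messages across detectors.

DETECTORS = 120
MESSAGES_PER_SECOND = 480_000   # peak aggregate message rate
BYTES_PER_SECOND = 4 * 10**9    # 4 GB/s aggregate


def average_message_bytes(bytes_per_s: float, msgs_per_s: float) -> float:
    """Mean message size implied by the aggregate byte and message rates."""
    return bytes_per_s / msgs_per_s


def per_detector_rate(msgs_per_s: float, detectors: int) -> float:
    """Messages per second per detector, assuming a uniform split."""
    return msgs_per_s / detectors


def link_gbps(bytes_per_s: float) -> float:
    """Aggregate rate expressed in gigabits per second."""
    return bytes_per_s * 8 / 10**9


if __name__ == "__main__":
    print(f"avg message size: {average_message_bytes(BYTES_PER_SECOND, MESSAGES_PER_SECOND):,.0f} bytes")
    print(f"per-detector rate: {per_detector_rate(MESSAGES_PER_SECOND, DETECTORS):,.0f} msg/s")
    print(f"aggregate: {link_gbps(BYTES_PER_SECOND):.0f} Gb/s")
```

Under these assumptions, each message averages roughly 8.3 KB, each detector emits about 4,000 messages per second, and the aggregate stream is about 32 Gb/s, roughly a third of a 100 Gb/s link.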

Working with the Berkeley Lab nuclear physicists building the GRETA experiment, ESnet is:

- Designing the computing environment that supports the experiment’s operation and data acquisition, and providing system architecture, performance engineering, networking, security, and related expertise.

- Advancing multiple key high-performance software components of the data acquisition system: the Forward Buffer, Global Event Builder, Event Streamer, and Storage Service.

- Developing several simulations of the instrument’s various components, allowing for functional and performance testing.
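The report does not describe the internals of the Global Event Builder. As a generic illustration of what event building commonly involves in nuclear physics data acquisition, the sketch below merges per-detector streams that are each already sorted by timestamp and groups hits that fall within a fixed coincidence window into candidate events. The `Hit` type, the tick-based timestamps, and the window rule are all hypothetical and should not be read as GRETA’s actual design.

```python
# Generic timestamp-coincidence event building (illustrative only; NOT the
# GRETA Global Event Builder). Hit, its fields, and the window rule are hypothetical.
import heapq
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass(frozen=True)
class Hit:
    """Hypothetical detector hit; fields are illustrative only."""
    timestamp: int  # clock ticks (hypothetical unit)
    detector: int   # detector index
    energy: float   # placeholder payload


def build_events(streams: Iterable[Iterable[Hit]], window: int) -> Iterator[List[Hit]]:
    """Yield groups of hits whose timestamps lie within `window` ticks of the
    first hit in the group. Each input stream must already be time-ordered."""
    # k-way merge of the sorted streams into one global time-ordered stream
    merged = heapq.merge(*streams, key=lambda h: h.timestamp)
    event: List[Hit] = []
    for hit in merged:
        # Close the current event once a hit falls outside its window
        if event and hit.timestamp - event[0].timestamp > window:
            yield event
            event = []
        event.append(hit)
    if event:
        yield event


if __name__ == "__main__":
    a = [Hit(0, 0, 1.2), Hit(100, 0, 0.7)]
    b = [Hit(3, 1, 0.5), Hit(104, 1, 0.9)]
    c = [Hit(50, 2, 2.1)]
    for ev in build_events([a, b, c], window=10):
        print([h.detector for h in ev])
```

With a 10-tick window, this example groups the hits into three events containing detectors `[0, 1]`, `[2]`, and `[0, 1]`. A production builder would also need to handle late or out-of-order fragments and backpressure, which this sketch ignores.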

In CY2022, ESnet completed the initial implementation of the Event Streamer; it is now ready for the integration phase.

Strengthening the Energy Grid with the ARIES Project

The National Renewable Energy Laboratory’s (NREL) Advanced Research on Integrated Energy Systems (ARIES) “digital twin” project uses ESnet to unite research capabilities at multiple scales and across sectors, creating a platform for understanding the full impact of energy systems integration. ARIES will make it possible to understand the impact of, and get the most value from, the millions of new devices that are connected to the grid daily, such as electric vehicles, renewable generation, hydrogen, energy storage, and grid-interactive efficient buildings.

In early December 2021, ARIES researchers teamed up with the Pacific Northwest National Laboratory (PNNL) and ESnet staff to showcase these capabilities in a live microgrid modeling experiment. This multi-laboratory demonstration showed that advanced control systems could allow the Cordova, Alaska, microgrid to maintain power to critical resources such as the hospital and the airport during an extreme weather event and loss of hydropower resources.

During this tightly coupled experiment, NREL simulated the Cordova microgrid while PNNL simulated the advanced control systems. Using its On-demand Secure Circuits and Advance Reservation System (OSCARS), ESnet provided a reliable, low-latency connection so that research equipment at the two laboratories could exchange frequent command and control information.

The round-trip latency between NREL and PNNL was 24 milliseconds, with a variance of approximately 0.02 milliseconds. The average latency in the original SuperLab demonstration was about 27 milliseconds, with a variance of about 11.5 milliseconds. The very low latency variance was vital to the project’s success and made exchanging command-and-control information between the two laboratories nearly deterministic.
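To make the latency comparison concrete, the sketch below summarizes a set of round-trip-time samples. Strictly, variance carries squared units (ms²); since the figures above are quoted in milliseconds, they may refer to a spread measure such as standard deviation, so the sketch reports several measures side by side. The sample values in the usage block are hypothetical, not ARIES measurements.

```python
# Summarizing round-trip-time (RTT) samples. Sample values below are
# hypothetical illustrations, not measurements from the ARIES experiment.
import statistics
from typing import Dict, Sequence


def rtt_summary(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize RTT samples given in milliseconds."""
    if not samples_ms:
        raise ValueError("need at least one RTT sample")
    return {
        "mean_ms": statistics.fmean(samples_ms),
        # Population variance: squared units (ms^2)
        "variance_ms2": statistics.pvariance(samples_ms),
        # Population standard deviation: same units as the samples (ms)
        "stdev_ms": statistics.pstdev(samples_ms),
        # Max-min spread, a simple worst-case jitter indicator
        "range_ms": max(samples_ms) - min(samples_ms),
    }


if __name__ == "__main__":
    steady = [24.0, 24.2, 23.8, 24.0]   # hypothetical, tightly clustered
    noisy = [20.0, 31.0, 24.0, 33.0]    # hypothetical, widely scattered
    for label, samples in (("steady", steady), ("noisy", noisy)):
        print(label, rtt_summary(samples))
```

A tightly clustered sample set yields a small standard deviation and range, which is what makes message timing predictable. A scattered set with a similar mean would not give control software the same timing guarantees.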

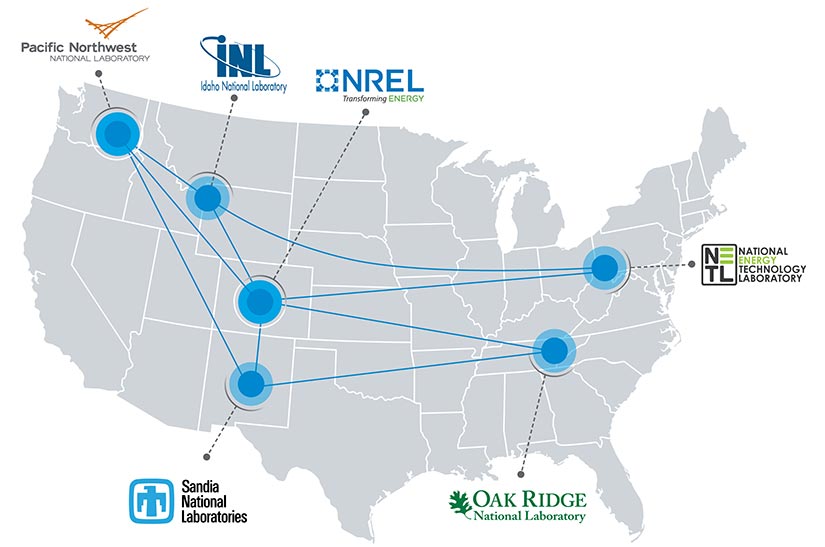

ARIES experiments continue and are being expanded to explore new use-case scenarios and to involve more national laboratory participants. A four-laboratory experiment involving NREL, Idaho National Laboratory, Sandia National Laboratories, and the National Energy Technology Laboratory is being planned for 2024; ESnet is providing both networking and technical consulting capabilities to support these efforts.

Shaking Up Data Sharing for EQSIM

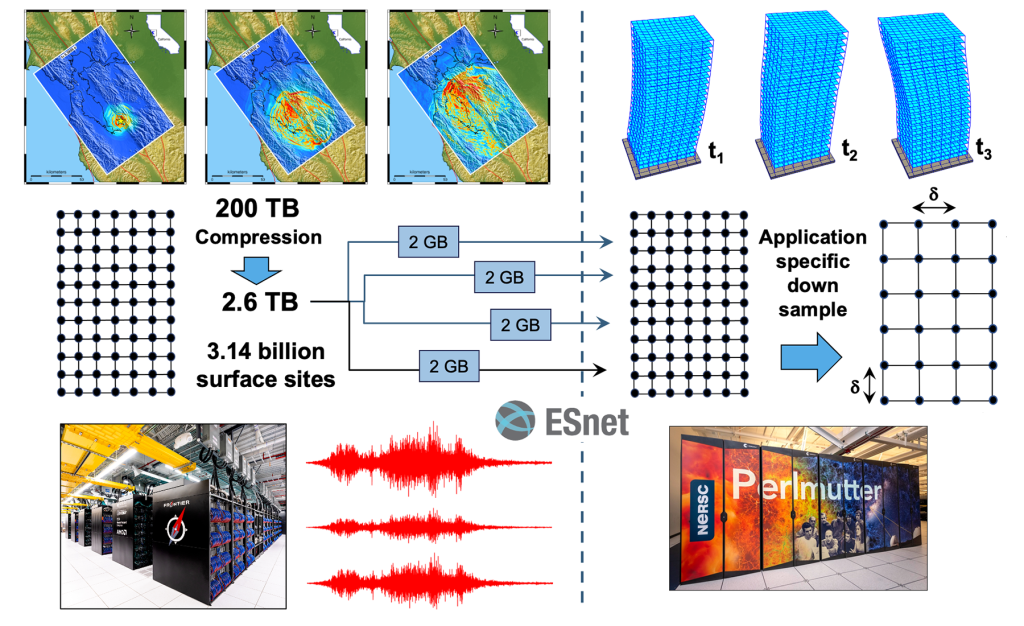

EQSIM, one of the projects backed by the DOE’s Exascale Computing Project, has been advancing earthquake simulation since 2017. It helps researchers understand how seismic activity affects the structural soundness of buildings and infrastructure. The EQSIM software was built and tested using the Summit supercomputer at Oak Ridge National Laboratory and the Cori and Perlmutter systems at Berkeley Lab. It uses physics-based supercomputer simulations to predict the effects of an earthquake on buildings and infrastructure and to create synthetic earthquake records that can provide much larger analytical datasets than were previously available. Thanks to the new GPU-accelerated HPC systems, the EQSIM team was able to create a detailed, regional-scale model that includes all of the necessary geophysics modeling features, such as 3D geology, earth surface topography, material attenuation, non-reflecting boundaries, and fault rupture.

ESnet was instrumental in enabling the sharing of the massive amounts of data needed to design and operate the EQSIM software across multiple platforms, noted EQSIM principal investigator David McCallen, a senior scientist in Berkeley Lab’s Earth and Environmental Sciences Area. “EQSIM workflow is designed around exploitation of multiple platforms and efficient data transfer,” McCallen said during the ESnet6 unveiling in October 2022. “ESnet is the glue that stitches it all together as we transfer our simulation data back and forth from one site to the other.”

Exploring Networking at the Wireless Edge

Wireless capabilities such as private 4G/5G, satellite, and Internet of Things (IoT) mesh networking are making it possible for a wide variety of field scientists to deploy mobile and remote sensor systems in ways never before possible. Using advanced wireless technologies to simplify the deployment of ESnet services beyond the optical edge, so that DOE researchers can transfer data seamlessly to DOE HPC centers and user facilities, will be a powerful enabler for future science breakthroughs across a wide range of disciplines.

In 2022, ESnet procured and deployed Nokia Digital Automation Cloud private 4G Citizens Broadband Radio Service (CBRS) hardware (which will be upgraded to the 5G standard in CY2023) to explore scientific use cases, identify future service needs for “wireless edge” field science applications, and conduct wireless networking and edge compute research. The CBRS system is being deployed as a multi-site federation, supporting activities at Berkeley Lab in Berkeley, California, as well as Earth and Environmental Sciences Area (EESA) research in a remote area near Mt. Crested Butte, Colorado.

This EESA collaboration has required deploying a combination of wireless technologies, including CBRS, Starlink satellite service, Long Range (LoRa) sensor mesh networking, remote off-grid Wi-Fi, and commercial cellular service, to backhaul sensor data from a sensor field in the Rocky Mountains to NERSC. ESnet will build on this capability in future years as part of a focused effort to identify the services and technologies that best support the science programs we serve.

Accelerating LUX-ZEPLIN’s Search for Dark Matter

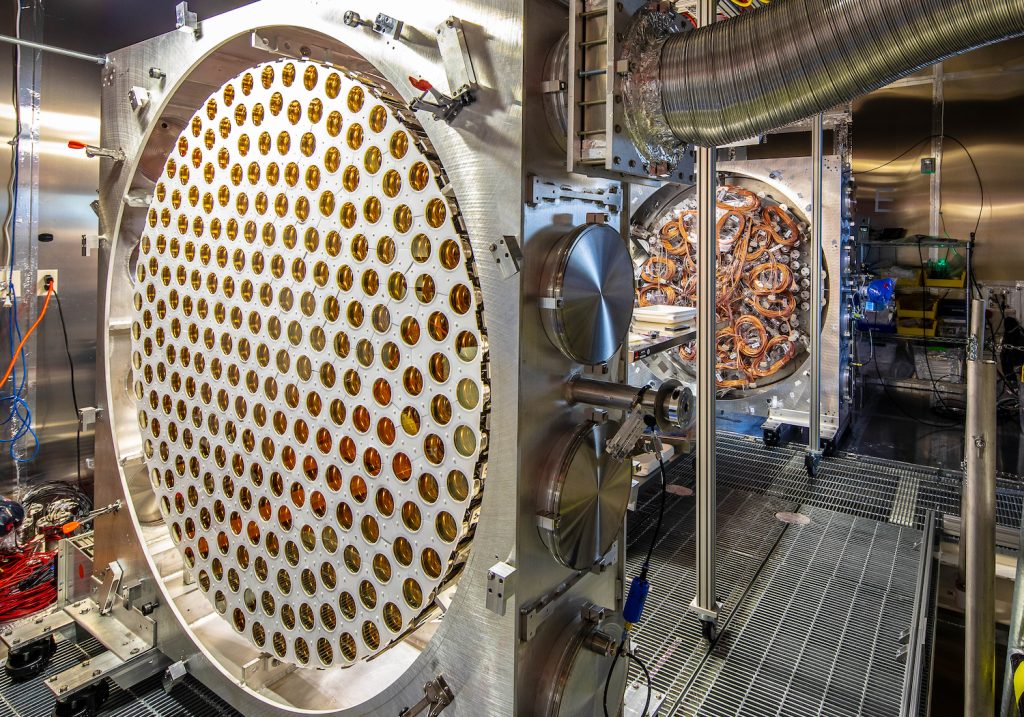

ESnet is playing a critical role in supporting the LUX-ZEPLIN (LZ) experiment, which researchers are using to search for dark matter in the Universe. Located at the Sanford Underground Research Facility (SURF) in South Dakota, the uniquely sensitive LZ detector is designed to detect dark matter in the form of weakly interacting massive particles (WIMPs). Led by Berkeley Lab, the experiment has 34 participating institutions and more than 250 collaborators.

Data collection from the LZ detector began in 2021; at present, about 1 petabyte of data is collected annually. The data pipeline runs 24/7, relying on ESnet and the National Energy Research Scientific Computing Center (NERSC) to consistently manage and transfer that data to multiple facilities. Each day’s batch of raw data is first sent up to the SURF surface facility, then on to NERSC via ESnet6 for processing, analysis, and storage. The raw and derived data products are also sent to a data center in the United Kingdom for redundancy.
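The annual volume quoted above translates into modest average rates. The sketch below computes the average daily volume and the equivalent sustained rate, assuming decimal units (1 PB = 10^15 bytes), a 365-day year, and perfectly even flow. Because data moves in daily batches, it also includes a helper for the rate needed to move one batch within a transfer window; the window length is a hypothetical parameter, not a documented LZ figure.

```python
# Average rates implied by "about 1 petabyte per year".
# Assumptions (not from the source): decimal units, a 365-day year, even flow;
# the batch transfer window is a hypothetical parameter.
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365
PETABYTE = 10**15


def daily_volume_tb(bytes_per_year: float) -> float:
    """Average data volume per day, in terabytes (10**12 bytes)."""
    return bytes_per_year / DAYS_PER_YEAR / 10**12


def sustained_gbps(bytes_per_year: float) -> float:
    """Average rate in gigabits per second if the yearly volume flowed evenly."""
    return bytes_per_year * 8 / (DAYS_PER_YEAR * SECONDS_PER_DAY) / 10**9


def batch_gbps(bytes_per_batch: float, window_hours: float) -> float:
    """Rate needed to move one batch within a (hypothetical) transfer window."""
    return bytes_per_batch * 8 / (window_hours * 3600) / 10**9


if __name__ == "__main__":
    daily_bytes = PETABYTE / DAYS_PER_YEAR
    print(f"{daily_volume_tb(PETABYTE):.2f} TB/day")
    print(f"{sustained_gbps(PETABYTE):.3f} Gb/s average")
    print(f"{batch_gbps(daily_bytes, 6):.2f} Gb/s if each batch moves in 6 hours")
```

This works out to about 2.7 TB per day, or roughly 0.25 Gb/s if spread evenly. If each day’s batch is moved in less than 24 hours, the rate during the transfer window is correspondingly higher.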

In 2022, the LZ dark matter detector passed the check-out phase of startup operations and delivered its first results. Based on that initial run, LZ researchers reported that LZ is currently considered the world’s most sensitive dark matter detector.

Replicating a Massive Climate Data Set

In 2022, ESnet enabled a very large data set of international importance to be replicated at two HPC facilities. The data set, more than 7 petabytes of climate data, belongs to the Earth System Grid Federation (ESGF), a peer-to-peer enterprise system that develops, deploys, and maintains software infrastructure for the management, dissemination, and analysis of model output and observational data. ESGF’s primary goal is to facilitate advancements in Earth system science. It is an international, interagency effort led by the DOE and co-funded by the National Aeronautics and Space Administration, National Oceanic and Atmospheric Administration, National Science Foundation, and several international laboratories.

A multi-lab team replicated more than 7 PB of ESGF data from Lawrence Livermore National Laboratory (LLNL) to the Argonne Leadership Computing Facility (ALCF) and Oak Ridge Leadership Computing Facility (OLCF) over ESnet6. The team used the Globus data transfer platform to ensure that the transfer was reliable and high-performance. The data sets were transferred from LLNL to either the ALCF or the OLCF and then replicated between the two facilities, so that both sites ended up with a complete copy of the data. These replicas provide several advantages, including resilience and protection against catastrophic data loss, and improved proximity of the data to high-performance computing centers.
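The two-stage pattern described above (source to one site, then cross-replication) can be sketched as a small planning and verification routine. This is a minimal illustration under stated assumptions: the round-robin split between sites, the dataset names, and the checksum manifests are hypothetical, and Globus itself is not modeled (no Globus API calls appear here).

```python
# Two-stage replication planning with completeness checking (illustrative).
# The round-robin split, dataset names, and checksum manifests are hypothetical;
# actual transfers were performed with Globus, which is not modeled here.
from typing import Dict, List, Set, Tuple

Plan = Dict[str, List[str]]
Move = Tuple[str, str, str]  # (dataset, from_site, to_site)


def plan_stage_one(datasets: List[str], sites: Tuple[str, ...]) -> Plan:
    """Stage 1: send each dataset from the source to exactly one site (round-robin)."""
    plan: Plan = {site: [] for site in sites}
    for i, name in enumerate(sorted(datasets)):
        plan[sites[i % len(sites)]].append(name)
    return plan


def plan_stage_two(plan: Plan) -> List[Move]:
    """Stage 2: copy everything each site received to every other site."""
    moves: List[Move] = []
    for src, names in plan.items():
        for dst in plan:
            if dst != src:
                moves.extend((name, src, dst) for name in names)
    return moves


def missing_at_site(source: Dict[str, str], site: Dict[str, str]) -> Set[str]:
    """Datasets absent at a site, or whose checksum differs from the source manifest."""
    return {name for name, digest in source.items() if site.get(name) != digest}


if __name__ == "__main__":
    datasets = ["dataset-a", "dataset-b", "dataset-c"]  # hypothetical names
    stage1 = plan_stage_one(datasets, ("ALCF", "OLCF"))
    stage2 = plan_stage_two(stage1)
    holdings = {site: set(names) for site, names in stage1.items()}
    for name, _src, dst in stage2:
        holdings[dst].add(name)
    print(all(h == set(datasets) for h in holdings.values()))
```

Splitting the first stage means the source sends each dataset only once; the second stage then runs between the two destination facilities. A check such as `missing_at_site`, run against each facility after both stages, could flag any dataset that needs to be re-sent.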