ESnet’s research and development activities are integral to ESnet’s mission to push the boundaries of networking and meet the science needs of tomorrow. Since 1989, our traffic has grown an average of 55% every year. ESnet relies on continuous staff innovation as well as focused applied research and development to increase the network’s efficiency in multiple areas. Our teams develop, test, and deploy new tools, protocols, and techniques to support the expanding needs of DOE scientists.

SENSE-ing How to Direct Network Resources

Most applications treat the network as a “black box,” sending packets without insight into network conditions or the ability to request resources. For complex, time-sensitive scientific workflows, ESnet offers application-driven orchestration — enabling applications to negotiate with the network for priority, bandwidth, and performance tuning.

ESnet’s OSCARS and Orchestrator tools already provide on-demand network provisioning in production. The SENSE (SDN for End-to-end Networked Science at the Exascale) project, still in the research phase, takes this further by building real-time models of networks, end systems, and data-transfer services. Science workflows can use SENSE to discover capabilities, provision resources, and dynamically adjust operations across multiple domains.

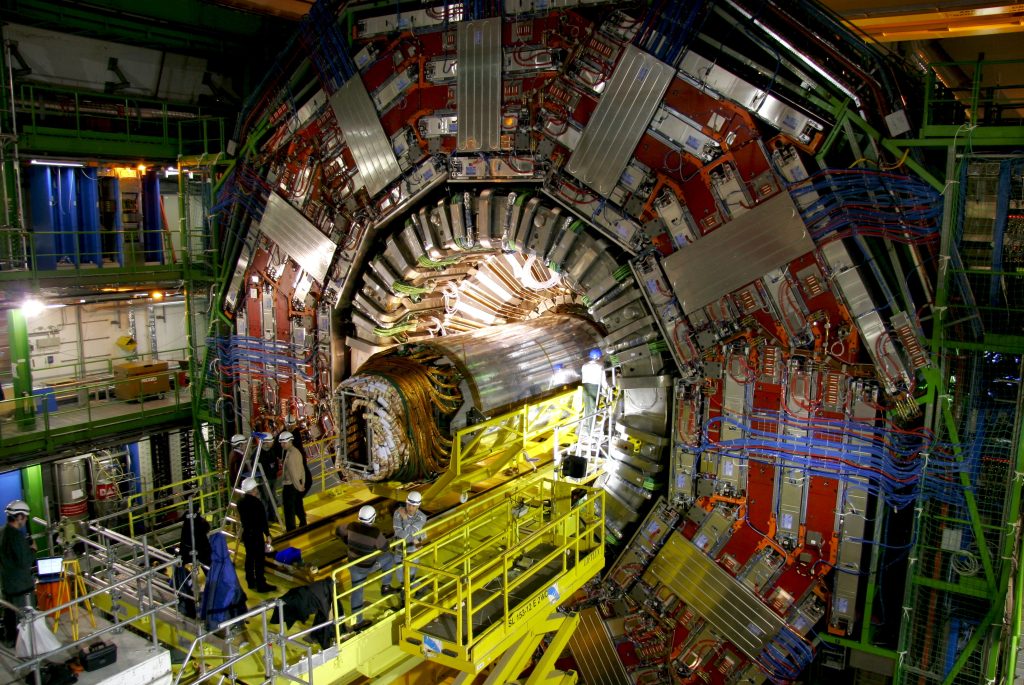

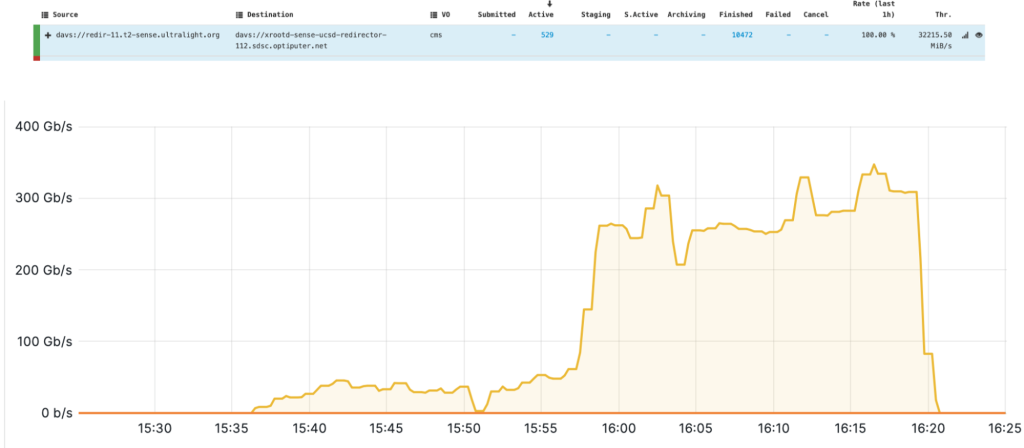

In 2024, the SENSE team focused on supporting the Large Hadron Collider (LHC) Compact Muon Solenoid (CMS) workflows using the Rucio/FTS/XRootD data management stack. The SENSE project integrated with Rucio to identify high-priority transfers, dynamically allocating network and end-system resources and tuning the data-transfer stack in real time.

During the LHC Data Challenge 2024 and demos at the SC24 conference, SENSE-enabled workflows achieved 330 Gbps sustained transfers between Caltech’s CMS Tier 2 storage and UC San Diego — close to the 400 Gbps target for the coming High-Luminosity LHC era. Deployments now span multiple LHC Tier 1 and Tier 2 sites, with more planned for 2025. Work also began on integrating SENSE with Globus-based transfers, which will extend deterministic, lifecycle-managed data movement to most large-scale science workflows.

In-Network Data Caching Reduces WAN Traffic

Scientific experiments and simulations generate large datasets that are often accessed repeatedly by researchers in the same region. These repeated transfers can unnecessarily consume bandwidth, increase congestion, and slow data access. In-network data caching stores frequently used datasets at strategic points in the network, reducing redundant transfers, lowering latency, and improving application performance.

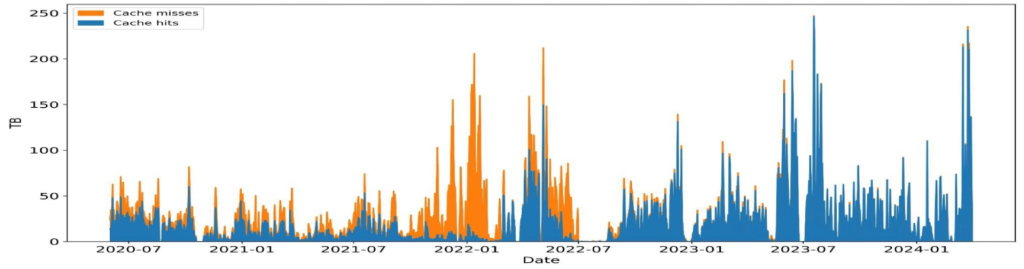

In 2024, ESnet collaborated with two High Energy Physics experiments at the LHC, US CMS and US ATLAS, and with the Open Science Data Federation (OSDF) to deploy five regional caching nodes, with the objective of understanding network utilization and characteristics. OSDF caches in Amsterdam and London primarily support DUNE and LIGO. US CMS caches were deployed in Southern California (2 PB), Chicago (340 TB), and Boston (170 TB), with the Boston node also serving US ATLAS.

Over 11 months, caching reduced WAN traffic by an average of 33 terabytes per day, with two-thirds of requests served locally and a 94% average hit rate. The SoCal, Chicago, and Boston caches cut WAN traffic by 69.3%, 48.4%, and 6.6%, respectively. Modeling shows doubling Chicago’s capacity could raise its hit rate to 80%. In 2025, ESnet will expand the study, converting single-service caches to multi-service platforms that can serve multiple science communities simultaneously, further enhancing efficiency and performance for data-intensive research.
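The two kinds of figures quoted above, a request hit rate and a reduction in WAN traffic, measure different things: the first counts requests, the second counts bytes. The following is a minimal sketch, assuming a least-recently-used (LRU) eviction policy and a made-up request trace; the source does not describe the eviction policy or software the regional caches actually use, and the class name `LRUCache` and its methods are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Byte-capacity LRU cache that tracks request and byte hit statistics.

    Illustrative only: this is not the caching software deployed by ESnet.
    """

    def __init__(self, capacity_bytes):
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self.objects = OrderedDict()  # name -> size, least recently used first
        self.requests = 0
        self.hits = 0
        self.bytes_requested = 0
        self.bytes_from_cache = 0

    def request(self, name, size):
        """Serve one request; return True on a cache hit."""
        self.requests += 1
        self.bytes_requested += size
        if name in self.objects:
            self.objects.move_to_end(name)  # mark as most recently used
            self.hits += 1
            self.bytes_from_cache += size
            return True
        # Miss: the object crosses the WAN, then is cached if it can fit.
        if size <= self.capacity_bytes:
            while self.used_bytes + size > self.capacity_bytes:
                _, evicted_size = self.objects.popitem(last=False)
                self.used_bytes -= evicted_size
            self.objects[name] = size
            self.used_bytes += size
        return False

    def hit_rate(self):
        """Fraction of requests served from the cache."""
        return self.hits / self.requests if self.requests else 0.0

    def wan_reduction(self):
        """Fraction of requested bytes that never crossed the WAN."""
        if not self.bytes_requested:
            return 0.0
        return self.bytes_from_cache / self.bytes_requested


if __name__ == "__main__":
    cache = LRUCache(capacity_bytes=100)
    # Hypothetical trace: a small popular file plus two large one-off datasets.
    trace = [("small", 10)] * 8 + [("big-a", 90), ("big-b", 90)]
    for name, size in trace:
        cache.request(name, size)
    print(f"request hit rate:   {cache.hit_rate():.0%}")
    print(f"WAN byte reduction: {cache.wan_reduction():.0%}")
```

In this toy trace the request hit rate is much higher than the byte-level WAN reduction, because the hits are concentrated on a small object while the large objects are each fetched once. Real traces may behave differently.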

Testing the Network Vitals

If ESnet is the “data circulatory system for science,” then it also requires regular check-ups. In 2024, ESnet advanced three major initiatives to deliver higher-fidelity, predictive, and AI-driven insights into network health.

High-Touch Services: The High-Touch project combines programmable hardware with ESnet-developed software to capture detailed flow and packet-level telemetry data. In 2024, the High-Touch team remediated existing deployments, expanded into ESnet’s European sites, and deployed a new ClickHouse database cluster to ingest and query flow data from all High-Touch nodes. This capability supported the LHC Data Challenge 2024, revealing lower-than-expected throughput in LHCONE flows without signs of packet loss, and helped to troubleshoot Vera Rubin Observatory transfers by identifying upstream packet reordering. It has also been an asset in security investigations, giving our customers increased visibility and the ability to track threat actor movements.

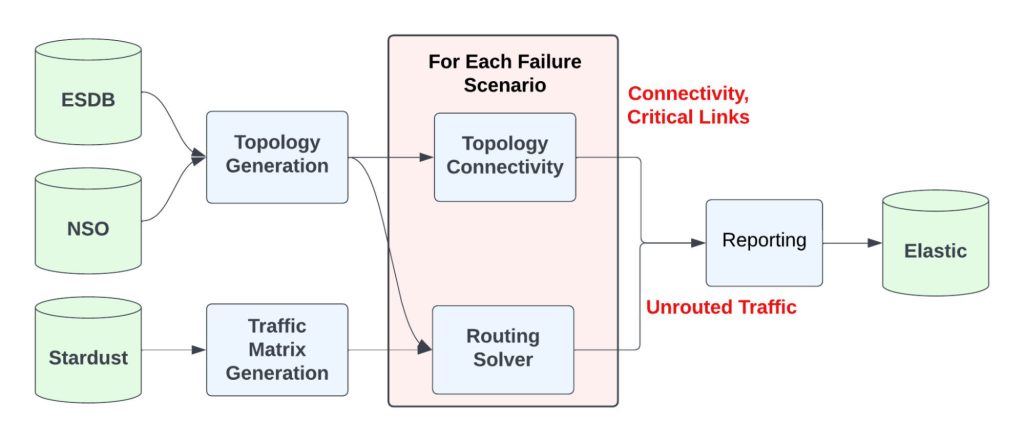

Modeling Network Bottlenecks: The Network Bottleneck and Capacity Prediction project team automated an end-to-end modeling pipeline — from traffic matrix generation to failure scenario analysis — using advanced solvers and optimization techniques. New tools now identify critical links under single- and dual-fiber cuts, integrate spectral graph theory for connectivity insights, and explore robust optimization to account for traffic and network uncertainties.
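To make the idea of "critical links under single- and dual-fiber cuts" concrete, here is a minimal sketch that enumerates every one-link and two-link failure on a small topology and reports the ones that disconnect it. It is a brute-force illustration only: the project's own pipeline uses solvers, optimization, and spectral methods that are not shown here, and the function names and the four-node topology are hypothetical.

```python
from itertools import combinations


def is_connected(nodes, links):
    """Return True if every node is reachable from the first one."""
    nodes = list(nodes)
    if not nodes:
        return True
    adjacency = {n: set() for n in nodes}
    for a, b in links:
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen = {nodes[0]}
    stack = [nodes[0]]
    while stack:  # iterative depth-first search
        current = stack.pop()
        for neighbor in adjacency[current]:
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return len(seen) == len(nodes)


def critical_cuts(nodes, links, max_failures=2):
    """List minimal sets of up to max_failures links whose loss splits the network.

    Assumes a simple graph: each link appears once in `links`.
    """
    found = []
    for k in range(1, max_failures + 1):
        for cut in combinations(links, k):
            # Skip supersets of a smaller cut already known to be critical.
            if any(set(prev) <= set(cut) for prev in found):
                continue
            remaining = [link for link in links if link not in cut]
            if not is_connected(nodes, remaining):
                found.append(cut)
    return found


if __name__ == "__main__":
    # Hypothetical topology: a three-node ring (A, B, C) with a spur to D.
    nodes = ["A", "B", "C", "D"]
    links = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "D")]
    for cut in critical_cuts(nodes, links):
        print(f"{len(cut)}-link cut:", cut)
```

Exhaustive enumeration grows quadratically with the number of links for dual cuts, which is fine for a sketch but is one reason a production analysis would lean on more scalable techniques.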

AI/ML-Driven Analysis: ESnet and our partner researchers at UC Santa Barbara collaborated to use netFound, a domain-specific network foundation model, to transform High-Touch telemetry data into actionable intelligence for predictive maintenance, anomaly detection, and flow control. In parallel, ESnet and Fermilab built deep-learning models to classify streaming vs. file-transfer traffic using only a few initial packets, achieving 93% accuracy and improving model transparency with interpretability tools.

Advancing IRI with GRETA/DELERIA

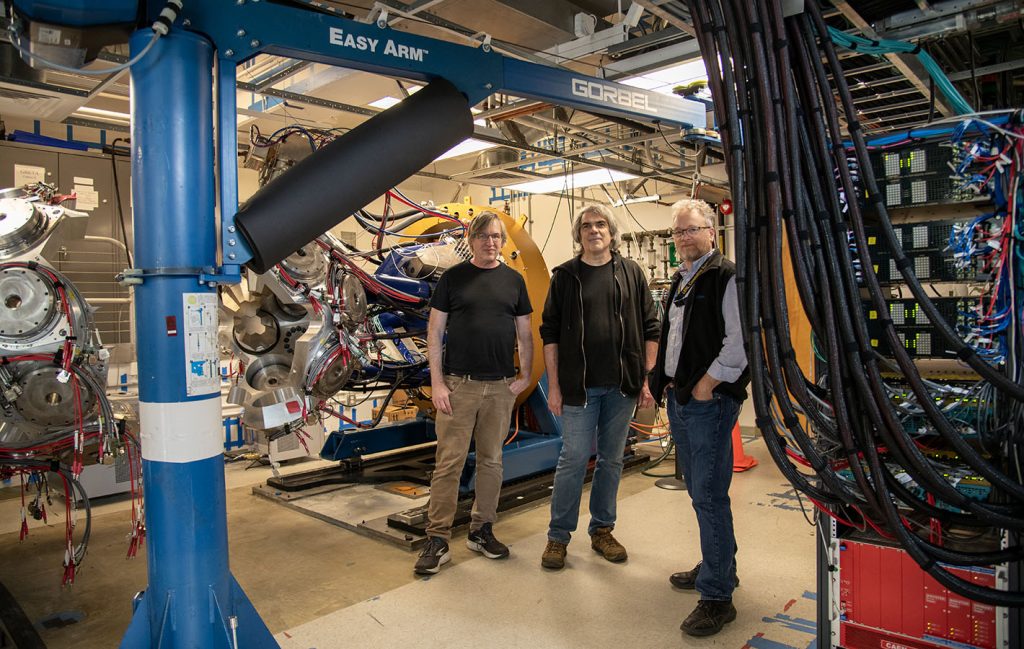

The Gamma Ray Energy Tracking Array (GRETA) is a next-generation gamma-ray detector offering order-of-magnitude sensitivity improvements over existing arrays, using segmented germanium crystals and advanced signal processing to track individual gamma-ray interactions. Led by Berkeley Lab, the GRETA project is a DOE Order 413.3 project funded by the DOE Office of Science’s Nuclear Physics program in collaboration with Oak Ridge National Laboratory (ORNL), Argonne National Laboratory (ANL), and the Facility for Rare Isotope Beams (FRIB) at Michigan State University. ESnet’s contribution focuses on the data acquisition system, including architecture design, performance engineering, software development, and networking and security support.

In 2024, ESnet staff worked with Berkeley Lab’s Nuclear Sciences Division to integrate custom-developed components with GRETA’s Signal Decomposition software and computing cluster, optimizing performance to meet key performance parameters. The collaborators also completed the Distributed Event Level Experiment Readout and Analysis (DELERIA) project, which is part of Berkeley Lab’s Laboratory Directed Research and Development (LDRD) program and enables real-time streaming of event-level detector data over high-speed wide-area networks (WANs) directly to remote HPC facilities for immediate analysis. The DELERIA computing pipeline was deployed within the ESnet testbed systems in Berkeley (six nodes) and the Oak Ridge Leadership Computing Facility at ORNL (24 nodes). A study and subsequent tuning of the software provided valuable insights into the behavior of scientific data streaming workflows in a multi-institutional environment and high-latency networks.

DELERIA’s composable, reusable software components separate analysis, data transport, and control, enabling experiments — especially smaller collaborations — to leverage leadership-class computing without heavy software engineering investment, accelerating discovery across diverse scientific domains.
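The separation of analysis, data transport, and control described above can be illustrated with a small sketch. Everything in it is hypothetical: the names `Transport`, `InMemoryTransport`, and `Controller` are invented for illustration and are not DELERIA's actual components or API. The point is only that an experiment supplies its own analysis function while the transport and control pieces are reused unchanged.

```python
from typing import Callable, Iterable, Iterator, List, Protocol


class Transport(Protocol):
    """Moves events from the instrument side to the compute side."""

    def stream(self, events: Iterable[bytes]) -> Iterator[bytes]:
        ...


class InMemoryTransport:
    """Stand-in transport; a real one would carry events over the WAN."""

    def stream(self, events: Iterable[bytes]) -> Iterator[bytes]:
        for event in events:
            yield event


class Controller:
    """Wires a transport to an analysis function and tracks run state."""

    def __init__(self, transport: Transport, analyze: Callable[[bytes], object]):
        self.transport = transport
        self.analyze = analyze
        self.events_processed = 0

    def run(self, events: Iterable[bytes]) -> List[object]:
        results = []
        for event in self.transport.stream(events):
            results.append(self.analyze(event))
            self.events_processed += 1
        return results


if __name__ == "__main__":
    # The analysis step is just a function; here it measures event size.
    controller = Controller(InMemoryTransport(), analyze=len)
    print(controller.run([b"ab", b"cde"]))
    print("events processed:", controller.events_processed)
```

Swapping `InMemoryTransport` for a network-backed class, or `len` for a real reconstruction routine, would not require changes to `Controller`, which is the kind of reuse the composable design aims for.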

Supporting Innovative Experimentation through Testbeds

Testbeds are dedicated, controlled environments that allow researchers and engineers to experiment, validate, and refine new technologies without impacting production systems. For ESnet, testbeds are essential for advancing networking, computing, and data movement innovations that support the DOE’s science mission. They provide the ability to prototype high-performance solutions, test interoperability, and evaluate operational readiness before transitioning to production and deployment at scale. In 2024, ESnet operated and contributed to multiple advanced testbeds in partnership with DOE facilities, academia, and the global R&E community.

QUANT-NET

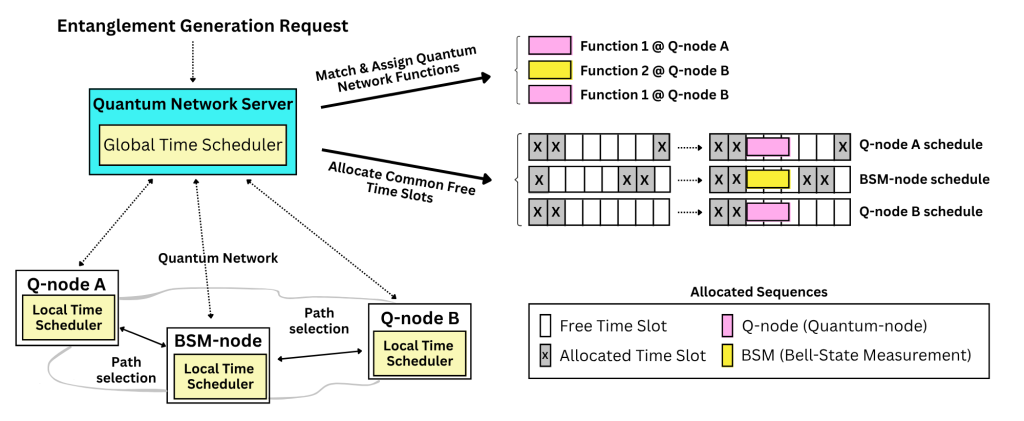

The Quantum Application Network Testbed for Novel Entanglement Technology (QUANT-NET) project, funded by DOE’s ASCR program since 2021, is led by ESnet and Berkeley Lab with partners from UC Berkeley, Caltech, and the University of Innsbruck. Its goal is to build a three-node distributed quantum computing network linking Berkeley Lab and two UC Berkeley sites via a 5 km entanglement-swapping optical fiber link, managed by a quantum network protocol stack.

In 2024, the team:

- Built a new ion-trap system at Berkeley Lab capable of consistently trapping a single ion. Next, the team will extract single photons from the ions and couple them into fibers, the first step toward quantum networking experiments.

- Automated a Hong-Ou-Mandel interferometer for high-fidelity Bell state measurements, a key step toward remote entanglement experiments.

- Developed a two-level control framework for quantum networks, designed specifically for the QUANT-NET testbed but extensible to other types of quantum networks.

- Developed an ARTIQ-based real-time control framework for trapped-ion quantum networking.

These milestones have laid the groundwork for teleporting quantum logic gates between remote quantum nodes — a fundamental building block for quantum repeaters and distributed quantum computing.

ESnet Testbed

The ESnet Testbed provides networking, computing, and storage resources for experimentation with novel technologies relevant to DOE science. Located primarily at Berkeley Lab, it features a dedicated 400 Gbps link to Starlight in Chicago with connectivity to the International Center for Advanced Internet Research (iCAIR).

In 2024, ESnet added six new 400G-capable high-performance servers. Key projects using the ESnet testbed included:

- Naval Research Laboratory (NRL): Investigated network resilience during catastrophic events, culminating in a large SC24 demonstration spanning multiple federated testbeds.

- DELERIA: Tested high-latency streaming of GRETA instrument simulations (see above).

- SciStream: Led by ANL, this project developed secure, low-latency streaming for scientific workflows, demonstrated at SC24 using ESnet, SciNet, and iCAIR testbeds.

IRI Testbed and Testbed Next Generation (NG)

The IRI program requires shared resources to explore its three workflow patterns: Time-Sensitive, Data Integration–Intensive, and Long-Term Campaign. ESnet contributes networking, connectivity, and its own testbed resources to the IRI Testbed. In 2024, ESnet supported two IRI experiments, DELERIA and EJFAT, in collaboration with OLCF, demonstrating streaming of raw instrument data into high-performance computing (HPC) facilities over ESnet.

ESnet also began designing Testbed NG, a more capable successor to the current testbed, based on requirements from the Federated IRI Testbed white paper. The conceptual design was completed in 2024, with the final draft released in February 2025.

FABRIC

FABRIC (FABRIC is Adaptive ProgrammaBle Research Infrastructure for Computer Science and Science Applications) is a national-scale research testbed funded by the National Science Foundation (NSF). ESnet is a core partner, leading the Scientific Advisory Committee, managing colocation hosting, and providing Layer-1 connectivity using spectrum from the ESnet6 Optical Line System.

In 2024, ESnet’s FABRIC team:

- Supported 400 Gbps upgrades at CERN, New York, and Los Angeles.

- Delivered major FABRIC Control Framework Software updates, improving stability, automation, and traffic engineering capabilities.

- Integrated Ciena 8100 “travel nodes” into the previously all-Cisco FABRIC network, extending Network Service Orchestrator services to enable multi-vendor interoperability for live demonstrations.

- Advanced FABRIC Federation (FabFed) software, enabling automated multi-testbed/cloud experiments, including new hybrid-cloud topologies demonstrated at NSF MERIF 2024.

These contributions ensure that FABRIC remains a versatile, cutting-edge platform for distributed science experiments, tightly integrated with ESnet’s production infrastructure.

Wireless Edge: Neutral-Host Multi-Core for Science Data Mobility

As DOE science increasingly moves into remote “greenfield” sites without robust communications infrastructure, ESnet’s Wireless Edge program is exploring cost-effective, high-performance wireless solutions to connect field researchers with DOE HPC and analysis resources.

ESnet Wireless Edge staff also focus on enabling secure, seamless access for researchers even when they are not in the field. In 2024, they deployed neutral-host multi-core capabilities at Berkeley Lab for evaluation. This approach allows DOE-owned Radio Access Network (RAN) equipment to simultaneously support multiple cellular networks on the same hardware (for example, commercial cellular providers and one or more lab or university private scientific data networks), operating as a private cellular network modeled on Eduroam, the international Wi-Fi internet access roaming service.

At Berkeley Lab, ESnet deployed cellular access points each capable of serving up to five networks, improving in-building coverage and reducing infrastructure costs. This shared, multi-core approach promises more efficient spectrum use, lower deployment expenses, and expanded wireless reach for DOE science.