ESnet’s research and development activities are integral to our mission of advancing the capabilities of today’s networking technologies to better serve the science requirements of the future. ESnet was formed in 1986, and since 1989 it has observed average network traffic growth of about 55% year on year. ESnet relies on continuous staff innovation as well as focused applied research and development to increase the network’s efficiency in multiple areas. We continually investigate, create, develop, test, and eventually transition to production the services, protocols, routing techniques, and tools necessary to meet the expanding needs of our user community of DOE scientists.
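To give a sense of what sustained growth of roughly 55% per year implies, the short calculation below compounds that rate. It is an illustration only: the 55% figure is the average quoted above, the growth is assumed to be constant, and the function names are made up for this example.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years needed for traffic to double at a constant compound annual rate."""
    return math.log(2) / math.log(1 + annual_growth)


def growth_factor(annual_growth: float, years: float) -> float:
    """Multiplicative increase in traffic after `years` of compound growth."""
    return (1 + annual_growth) ** years


rate = 0.55  # average year-on-year growth quoted in the text
print(f"doubling time: {doubling_time_years(rate):.2f} years")
print(f"growth over 10 years: {growth_factor(rate, 10):.0f}x")
```

Under these assumptions, traffic doubles roughly every 19 months and grows about 80-fold over a decade.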

Orchestrating Domain Science Workflows with SENSE

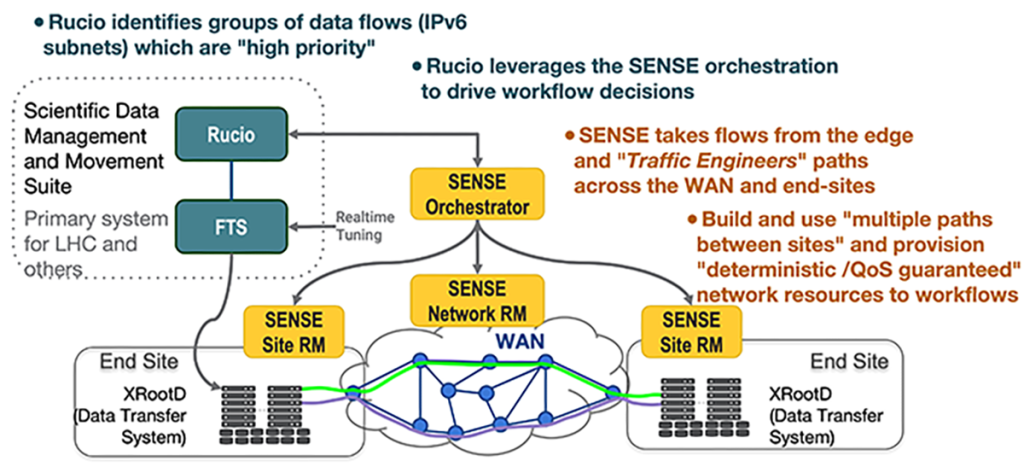

ESnet’s SENSE (SDN for End-to-end Networked Science at the Exascale) project developed an orchestration system that enables domain science applications to integrate discovery and provisioning of network resources into their workflow processing. Distributed domain science workflows can then interact with the network as a first-class schedulable resource, as they currently do for instruments, compute, and storage-system resources.

In 2023, ESnet focused on continuing to integrate SENSE into LHC Compact Muon Solenoid (CMS) workflows that use the Rucio/FTS/XRootD data management and movement system. The Rucio/FTS/XRootD data-transfer stack is used by multiple domain science projects that need to manage highly distributed, large-scale datasets. Although CMS compute system management provides deterministic resources for individual users, the data transfers and associated network services are unpredictable.

SENSE services integration is based on Rucio’s identification of dataflow groups that should have a higher priority, which is then reflected in network provisioning. This orchestration across Rucio data-transfer request processing and SENSE-driven network services also includes real-time, flow-specific tuning across all elements of the data-transfer stack, including the FTS and XRootD systems.
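As a toy illustration of how a priority assigned to a dataflow group might be reflected in network provisioning, the sketch below splits a link's capacity in proportion to per-group weights. This is not SENSE's or Rucio's actual logic, which is not described here: weighted proportional sharing is an assumption, and the group names, weights, and link capacity are hypothetical.

```python
def allocate_bandwidth(link_gbps: float, group_weights: dict) -> dict:
    """Split link capacity among dataflow groups in proportion to their weights."""
    total_weight = sum(group_weights.values())
    if total_weight <= 0:
        raise ValueError("at least one group must have a positive weight")
    return {
        group: link_gbps * weight / total_weight
        for group, weight in group_weights.items()
    }


# Hypothetical example: two dataflow groups sharing a 400 Gbps path,
# with the production group weighted three times higher than analysis.
shares = allocate_bandwidth(400, {"production": 3, "analysis": 1})
print(shares)
```

With these hypothetical weights, the higher-priority group receives 300 Gbps and the other 100 Gbps.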

ESnet’s 2023 work on SENSE means that systems such as Rucio now have additional mechanisms to improve their users’ quality of experience through increased end-to-end performance, prioritization of activities, and enhanced accountability. From a networked cyberinfrastructure perspective, this enables a new class of fine-grained control of remote-systems interconnection. This will be increasingly important for workflows that require tight coupling across multiple distributed purpose-built facilities.

Analyzing Network Health

ESnet needs to deeply understand its network performance for both daily operations and long-term planning. Simply tracking basic metrics like link usage and outages isn’t enough. Sophisticated tools that offer detailed insights and predictive capabilities are crucial for real-time network management and making informed decisions about the future of ESnet.

High Touch Services

The ESnet High Touch project provides new network services through the use of programmable hardware, in the form of Field Programmable Gate Arrays (FPGAs), to collect and analyze network traffic. These services provide network telemetry, at both the flow and packet levels, at multi-100 Gigabit Ethernet speeds. Such measurements provide unprecedented visibility into network traffic for network engineering, cybersecurity, and networking research.

In 2023, ESnet completed the bulk of physical installations of the required servers with FPGA hardware at 40 locations throughout the ESnet6 footprint. The High Touch project team also designed and profiled a ClickHouse database solution to support the expected target of 1 trillion flow records. A proof-of-concept deployment has been completed.

To promote the use of the High Touch platform, ESnet has released the source code for the lower layers of the FPGA hardware functionality, as the open-source ESnet SmartNIC platform, available on GitHub. This release allows anyone to build their own platform designs using an FPGA Smart Network Interface Card (SmartNIC) to implement a variety of high-speed, high-performance network applications.

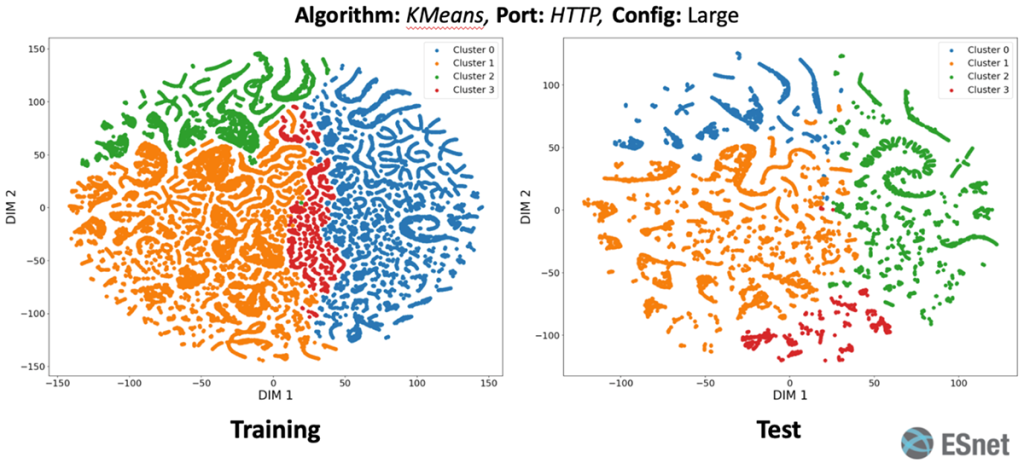

The Euclidean distance and well-separated colors suggest reliable clustering.

Network Bottleneck Modeling and Capacity Prediction

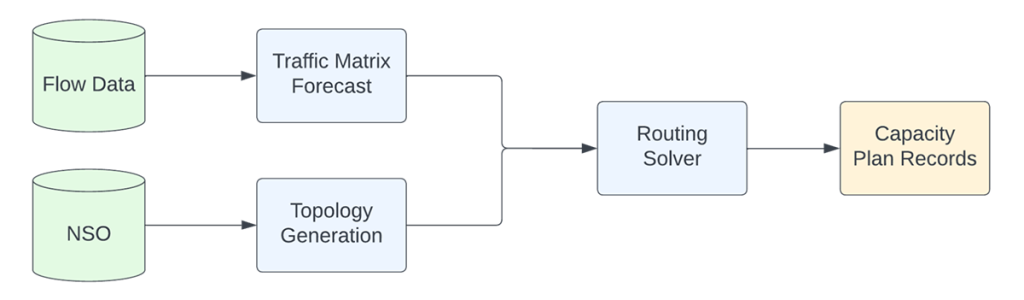

ESnet’s network planning used to rely on limited data and reactive strategies. Now, with vendor enhancements and better accessibility of metadata-enriched flow data through Stardust, ESnet can make strategic, data-driven decisions that are optimized for cost and latency and potentially Service-Level Agreement-aware. ESnet expects such a data-driven approach will improve user experience in terms of performance and resilience over the long term.

Initiated in 2023, the Network Bottleneck Modeling and Capacity Prediction project aims to provide insights into bottlenecks in ESnet’s production network and to systematically forecast the minimum capacity requirements for the medium term (three to six months) and long term (three to five years). This project not only models the network’s steady state but also simulates about 300 potential network-degradation scenarios. Each scenario is then analyzed using a linear program featuring millions of variables and constraints. As a result, the project enables automated yet rigorous network resilience analysis that was not feasible with the previous manual approach. ESnet’s data scientists expect that, through collaboration with the network operations team, the project will help enhance ESnet’s Site Resilience Program and will be integrated into the next-generation capacity-management system.
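The idea of evaluating many degradation scenarios can be illustrated at a very small scale. The sketch below is not the project's linear program: it substitutes simple shortest-path rerouting for the optimization, and the four-node topology, link capacities, and demands are all hypothetical. It only shows the shape of the analysis: remove one link at a time, reroute the traffic, and record the worst link utilization.

```python
from collections import deque

# Hypothetical topology: undirected links with capacity in Gbps.
LINKS = {("A", "B"): 400, ("B", "C"): 400, ("A", "D"): 100, ("D", "C"): 100}
# Hypothetical steady-state demands in Gbps between site pairs.
DEMANDS = {("A", "C"): 150, ("A", "B"): 100}


def shortest_path(links, src, dst):
    """Breadth-first search; returns a list of nodes, or None if disconnected."""
    adj = {}
    for u, v in links:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in adj.get(node, []):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def worst_utilization(links, demands):
    """Route each demand on its shortest path and return the peak load/capacity
    ratio, or None if some demand cannot be routed at all."""
    load = {link: 0.0 for link in links}
    for (src, dst), gbps in demands.items():
        path = shortest_path(links, src, dst)
        if path is None:
            return None
        for u, v in zip(path, path[1:]):
            key = (u, v) if (u, v) in load else (v, u)
            load[key] += gbps
    return max(load[link] / links[link] for link in links)


def single_link_failures(links, demands):
    """Evaluate every scenario in which exactly one link is down."""
    results = {}
    for failed in links:
        surviving = {l: c for l, c in links.items() if l != failed}
        results[failed] = worst_utilization(surviving, demands)
    return results


print("steady state:", worst_utilization(LINKS, DEMANDS))
for link, util in single_link_failures(LINKS, DEMANDS).items():
    print("failure of", link, "-> peak utilization", util)
```

In this toy network the steady state peaks at 62.5% utilization, while losing either 400 Gbps link pushes traffic onto the 100 Gbps path and drives peak utilization well above 100%, flagging a bottleneck. A linear program, as used by the project, can additionally split traffic across paths and find the best achievable routing for each scenario.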

Ramping Up Network Data Services

ESnet’s primary focus has always been on providing excellent (reliable, high-speed, low-latency) network services to the DOE science community. However, the surge in data-intensive, distributed research has driven a shift toward integrating computational storage capabilities into the network, offering an even more powerful and efficient data services platform to support today’s complex scientific workflows.

In-Network Data Caching

In-network caching creates temporary replicas of popular datasets and stores them in the network local to wherever there is a high demand for them. This results in decreased traffic bandwidth demands on the network, as well as reduced data access latency for all applications, offering increased application performance. In-network data caching also allows data pools to be designed into the network topology with opportunities for traffic engineering using performance diagnostics and for network bandwidth allocation to alleviate congestion.
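The bandwidth effect of caching can be shown with a small simulation. The sketch below assumes a least-recently-used (LRU) eviction policy, which is a common choice but is not stated to be the policy of ESnet's caching nodes; the dataset names, sizes, and request sequence are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Least-recently-used cache keyed by dataset name, bounded by total size."""

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.used_gb = 0.0
        self.items = OrderedDict()  # dataset name -> size in GB

    def request(self, name, size_gb):
        """Return True on a cache hit; on a miss, store the dataset if it fits."""
        if name in self.items:
            self.items.move_to_end(name)  # mark as most recently used
            return True
        if size_gb <= self.capacity_gb:
            # Evict least recently used datasets until the new one fits.
            while self.used_gb + size_gb > self.capacity_gb:
                _, evicted_gb = self.items.popitem(last=False)
                self.used_gb -= evicted_gb
            self.items[name] = size_gb
            self.used_gb += size_gb
        return False


def wan_traffic(requests, cache):
    """Return (GB fetched over the wide-area network, GB requested in total)."""
    fetched = total = 0.0
    for name, size_gb in requests:
        total += size_gb
        if not cache.request(name, size_gb):
            fetched += size_gb
    return fetched, total


# Hypothetical request sequence: (dataset name, size in GB).
requests = [("a", 10), ("b", 10), ("a", 10), ("c", 10), ("a", 10), ("b", 10)]
fetched, total = wan_traffic(requests, LRUCache(capacity_gb=20))
print(f"fetched {fetched} GB over the WAN out of {total} GB requested")
```

In this example, repeated requests for the popular dataset are served locally, so only 40 GB of the 60 GB requested crosses the wide-area network.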

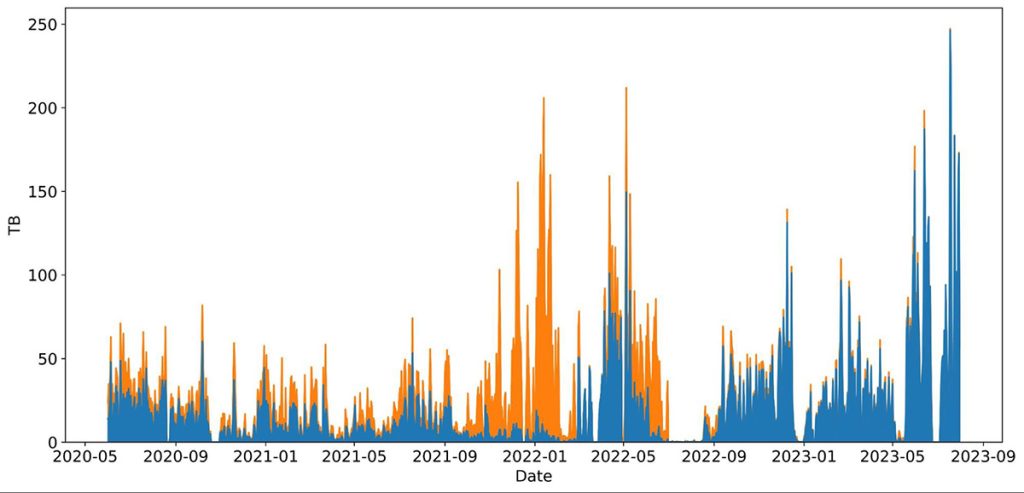

In CY2023, ESnet collaborated with the U.S. team working with the Compact Muon Solenoid (CMS) detector at CERN’s Large Hadron Collider (LHC) and the Open Science Data Federation (OSDF) to analyze network utilization and traffic characteristics using five ESnet-deployed caching nodes across Europe and the U.S. The pilot demonstrated the potential for significant bandwidth savings by mitigating redundant data movements. Moreover, the project revealed the possibility of dynamically rerouting network traffic based on predicted data movement patterns, further optimizing network performance and alleviating congestion.

DTN-as-a-Service (DTNaaS)

ESnet’s DTN-as-a-Service (DTNaaS) model explores the concept of a managed data-movement service platform that supports a well-defined pool of transfer software images. The goal of this model is for it to “just work” across a known infrastructure footprint, and in particular to include features to manage DTN configuration and tuning in a reproducible and scalable manner. The project has initially focused on a prototype controller implementation and automated packaging of DTN transfer software (e.g., Globus). At the same time, developing a more general framework to accommodate many types of software containers that require flexible deployment strategies has now become an equally important objective.

These requirements have resulted in the development of a DTNaaS controller called Janus. This lightweight orchestration framework is built around exposing container configuration and tuning, specifically for high-performance data mover applications. By specializing the feature set of the framework, Janus reduces complexity and offers a path to rapid, optimized deployment for common DTN hardware patterns. Importantly, Janus addresses a common DTN requirement for multiple, high-speed network attachments in containers with IPv4/IPv6 dual-stack configurations.

In 2023, Janus and its realization of DTNaaS enabled the orchestration of DELERIA software containers for the IRI testbed clusters (see page XX), and a Janus service layer was integrated into the FABRIC Software Federation effort (see below) to allow for DTN software deployments on provisioned testbed virtual machines.

Supporting Leading-Edge Testbeds

A testbed provides a controlled environment in which researchers, developers, and/or engineers can conduct experiments, assess performance, identify issues, and make improvements without affecting the production environment. ESnet operates several testbeds in partnership with DOE and academic researchers.

FABRIC: A National Experimental Research Infrastructure

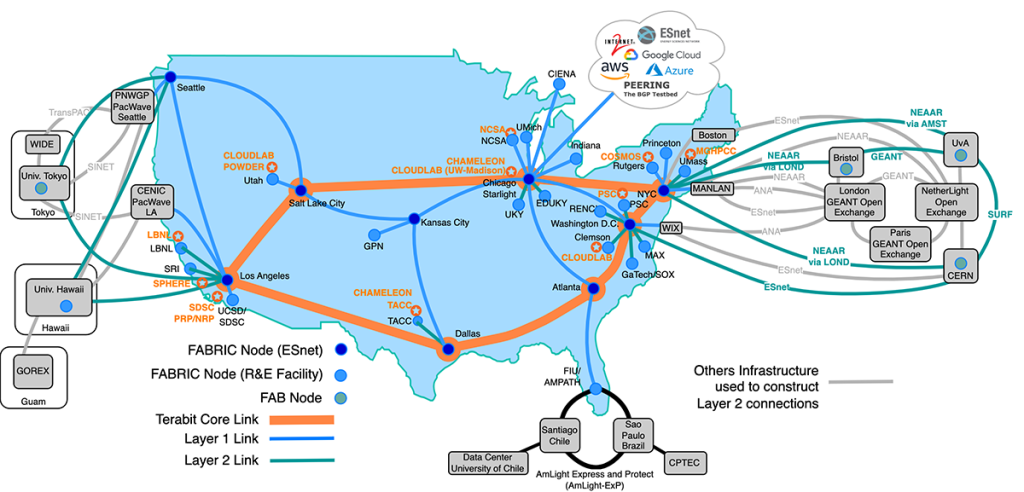

Funded by the National Science Foundation’s (NSF’s) Mid-Scale Research Infrastructure program, FABRIC is a novel, adaptive, programmable national research network testbed that allows computer science and networking researchers to develop and test innovative architectures that could yield a faster, more secure Internet, and advanced domain science workflows. FABRIC collaborators include the University of North Carolina Renaissance Computing Institute (RENCI), the University of Illinois Urbana-Champaign, the University of Kentucky, Clemson University, and ESnet.

The four-year construction phase for the national-scale testbed was completed in 2023, and the project has transitioned to a new grant, FABRIC Operations – Accelerating Innovation and Research. ESnet staff will continue to lead the Scientific Advisory Committee and manage the colocation hosting and associated physical infrastructure, as well as Layer 1 connectivity operations. FABRIC integrates networking, compute, and storage into a unique type of cyberinfrastructure. It includes 30 sites within the USA and international sites at CERN, University of Bristol, University of Amsterdam, and University of Tokyo. In 2023, ESnet completed installation of the core network sites and transponders connecting FABRIC nodes and providing for the 1.2 Tbps dataplane.

In 2023, ESnet also completed development of a custom network control plane tailored for FABRIC research activities. This is a key part of the FABRIC infrastructure, allowing science-driven research such as the University of Chicago ATLAS team’s work to build a new LHC data-caching workflow using the FABRIC infrastructure at CERN and other locations. ESnet also led the development of the FABRIC Federation Software Extension stack that provides the toolkit for FABRIC users to run large experiments across multiple testbed and cloud providers.

The ESnet Testbed

The ESnet testbed consists of a set of networking, computing, and storage resources made available to all for experimenting with new technologies, concepts, and software relevant to the DOE mission and the broader R&E community. Those resources are currently located primarily in Berkeley, with a dedicated 400 Gbps network connection to ESnet’s rack at the Starlight facility in Chicago. This footprint extends to the ESnet network at Sunnyvale, California, as well as at Starlight.

In 2023, the ESnet testbed supported several experiments, both internal and external to ESnet, including:

- The Naval Research Laboratory (NRL) ran Remote Direct Memory Access (RDMA) data transfer over a high-latency network using the dedicated link to Starlight and the connection with the iCAIR testbed. NRL used this infrastructure to demonstrate 400 Gbps RDMA transfer to the SC23 show floor in Denver.

- SciStream, led by Argonne National Laboratory staff, used the ESnet testbed for testing within a high-latency network as well as for testing within simulated facilities. SciStream aims to provide a generic framework for scientific workflows that will stream data instead of transferring files.

- ESnet also committed resources to a successful Small Business Innovation Research proposal led by Argonne and Kitware aiming to provide an accurate model of networking testbeds. The collaboration will start in 2024.

The IRI Testbed

Coauthored by ESnet and OLCF staff and published in late 2023, the Federated IRI Science Testbed (FIRST) white paper proposed a progressive design-experiment-test-refine cycle to establish a shared environment for IRI. It calls for developers and pilot application users to come together and advance the overall vision by experimenting with the design patterns and addressing the gaps identified in the IRI Architecture Blueprint Activity Final Report.

ESnet supports the IRI Testbed by providing the focused network capabilities to link participating facilities. In 2023, ESnet collaborated with Oak Ridge National Laboratory on the initial IRI testbed design to connect the ORNL Advanced Computing Ecosystem testbed with the ESnet Testbed. In addition, two initial IRI-related projects were identified to be deployed on this infrastructure: DELERIA and EJFAT.

The Quantum Application Network Testbed for Novel Entanglement Technology (QUANT-NET)

Funded by DOE ASCR Research in 2021, the Quantum Application Network Testbed for Novel Entanglement Technology (QUANT-NET) project is led by dual-appointment researchers at ESnet and Berkeley Lab working with partners from University of California, Berkeley (UC Berkeley), the California Institute of Technology, and the University of Innsbruck. The team is building a three-node distributed quantum computing testbed between two sites, Berkeley Lab and UC Berkeley, connected with an entanglement swapping substrate over optical fiber and managed by a quantum network protocol stack.

In 2023, the QUANT-NET research team made significant progress. Some highlights:

- Design and implementation of the quantum testbed infrastructure, including the fiber construction between UC Berkeley and Berkeley Lab and outfitting a quantum lab at Berkeley Lab.

- The design and development of the trapped-ion Q-node is in progress.

- The Quantum Frequency Conversion design has been completed; a prototype is under evaluation.

- A color-center single photon source has been developed. Indistinguishable photon generation using color centers in silicon photonics has been successfully demonstrated.

- The initial design of quantum network architecture and protocol stack has been completed; control plane development is in progress.

- The initial design of the quantum network real-time control framework has been completed.

Integrating Distributed Scientific Workflows

Instrument, compute, storage, and network resources have always been seen as distinct and, in most cases, have been managed separately. These administrative boundaries often lead to technical implementations and deployments that create obstacles when building a widely distributed science workflow. These obstacles include incongruous authentication/authorization mechanisms and incompatible compute run-time environments, to name a few. ESnet staff are working to develop solutions that can seamlessly integrate instruments, compute, storage, and network resources in order to reduce inefficiencies in large-scale distributed workflows and to accelerate the realization of the DOE’s IRI vision.

Gamma Ray Energy Tracking Array (GRETA)

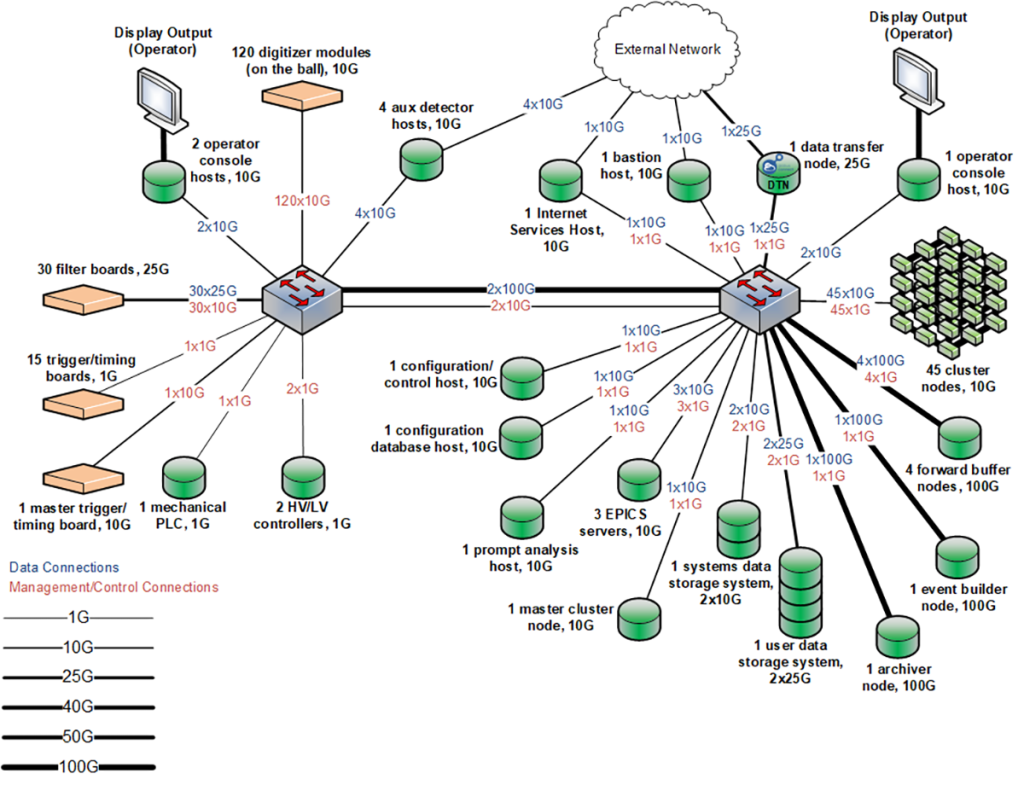

Led by Berkeley Lab, GRETA (Gamma Ray Energy Tracking Array) is a 413.3 project funded by the DOE Office of Science’s Nuclear Physics program, in collaboration with Oak Ridge, Argonne, and the Facility for Rare Isotope Beams (FRIB), and being constructed at Michigan State University. GRETA represents a major advancement in developing γ-ray detector systems: it can provide order-of-magnitude gains in sensitivity compared to existing arrays and will play an essential role in advancing the field of low-energy nuclear science.

ESnet supplies the architecture (see figure) for the computing environment that supports the GRETA experiment operation and data acquisition. Staff are also developing multiple key high-performance software components of the data acquisition system: the Forward Buffer, the Global Event Builder, the Event Streamer and the Storage Service. ESnet also provides system architecture, performance engineering, networking, security, and other related expertise to GRETA.

In 2023, during GRETA’s fabrication and integration phase, ESnet staff developed new simulations as well as features that were not initially planned, including new visualization software that measures and displays the energy levels of the detectors. ESnet also developed a new alert system for detecting anomalies during the operation of the instruments.

DELERIA: Extending Data Pipelines over WANs

Rapid advances in the performance of standard IP networks have opened up exciting new possibilities in both the readout and online analysis of data from physics detectors. The approaches in development could allow nuclear physics experiments to perform near real-time processing using DOE high-performance computing facilities, reducing cost and effort. This allows scientists to adjust the instruments as the experiment progresses. With higher computational capabilities, ML/AI can be utilized to automate tuning of the detectors.

The DELERIA (for Distributed Event Level Experiment Readout and Analysis) project, funded by the DOE’s Laboratory Directed Research and Development (LDRD) program, aims to explore readouts of composable software components that include protocol translation, analysis, event building, and storage over WANs with online analysis running at leadership computing facilities. To accomplish this, DELERIA will generalize existing software recently developed for the GRETA project, which explored these ideas in the context of local area networks.

In 2023, DELERIA researchers ported the GRETA computing pipeline onto the ESnet Testbed infrastructure. They developed an orchestrator that supports the ESnet Janus container management system and is capable of orchestrating the pipeline across multiple facilities. They also developed a new signal-decomposition component with a plugin architecture that allows different algorithms to be tested.